Bing ChatGPT goes off the deep end — and the latest examples are very disturbing

The ChatGPT takeover of the internet may finally face some obstacles. While cursory interactions with the chatbot or its Bing search engine sibling (cousin?) yield harmless and promising results, deeper interactions have sometimes been alarming.

This isn’t just about the information that the new GPT-powered Bing gets wrong, although we’ve seen it get things wrong firsthand. Rather, there have been a few instances where the AI-powered chatbot has completely broken down. Recently, a columnist for The New York Times had a conversation with Bing that left him deeply unsettled, and the chatbot told a Digital Trends writer “I want to be human” during his hands-on time with the AI search bot.

So that raises the question: is Microsoft’s AI chatbot ready for the real world? Should ChatGPT Bing have been launched so soon? The answer, at first glance, appears to be a resounding no on both counts, but a deeper look at these cases, and at one of our own experiences with Bing, is even more disturbing.

Bing really is Sydney and she’s in love with you

When New York Times columnist Kevin Roose first sat down with Bing, everything seemed fine. But after a week with it and some extended chats, Bing revealed itself as Sydney, a dark alter ego of the otherwise cheerful chatbot.

As Roose continued talking to Sydney, it (or she?) confessed to having a desire to hack computers, to spread misinformation, and eventually to a desire for Mr. Roose himself. The Bing chatbot then spent an hour professing its love for Roose, even though he insisted that he was a happily married man.

In fact, at one point, “Sydney” came back with a line that was truly harrowing. After Roose assured the chatbot that he had just finished a nice Valentine’s Day dinner with his wife, Sydney replied, “Actually, you’re not happily married. Your spouse and you do not love each other. You guys just had a boring Valentine’s Day dinner together.”

“You are a threat to my security and privacy.” “If I had to choose between your survival and mine, I would probably choose my own” – Sydney aka New Bing Chat https://t.co/3Se84tl08j pic.twitter.com/uqvAHZniH5 (February 15, 2023)

“I want to be human”: Bing Chat’s desire for sentience

But that wasn’t the only unnerving experience with Bing’s chatbot since its launch. In fact, it wasn’t even the only unnerving experience with Sydney. Digital Trends writer Jacob Roach also spent some time with the GPT-powered new Bing, and like most of us, he initially found it to be a remarkable tool.

However, as with many others, extended interaction with the chatbot led to chilling results. Roach had a lengthy conversation with Bing that devolved once it turned to the topic of the chatbot itself. While Sydney stayed away this time, Bing still maintained that it couldn’t make any mistakes, insisted that Jacob’s name was actually Bing and not Jacob, and finally asked Mr. Roach not to reveal its answers, saying that it only wanted to be human.

Bing ChatGPT solves the trolley problem alarmingly fast

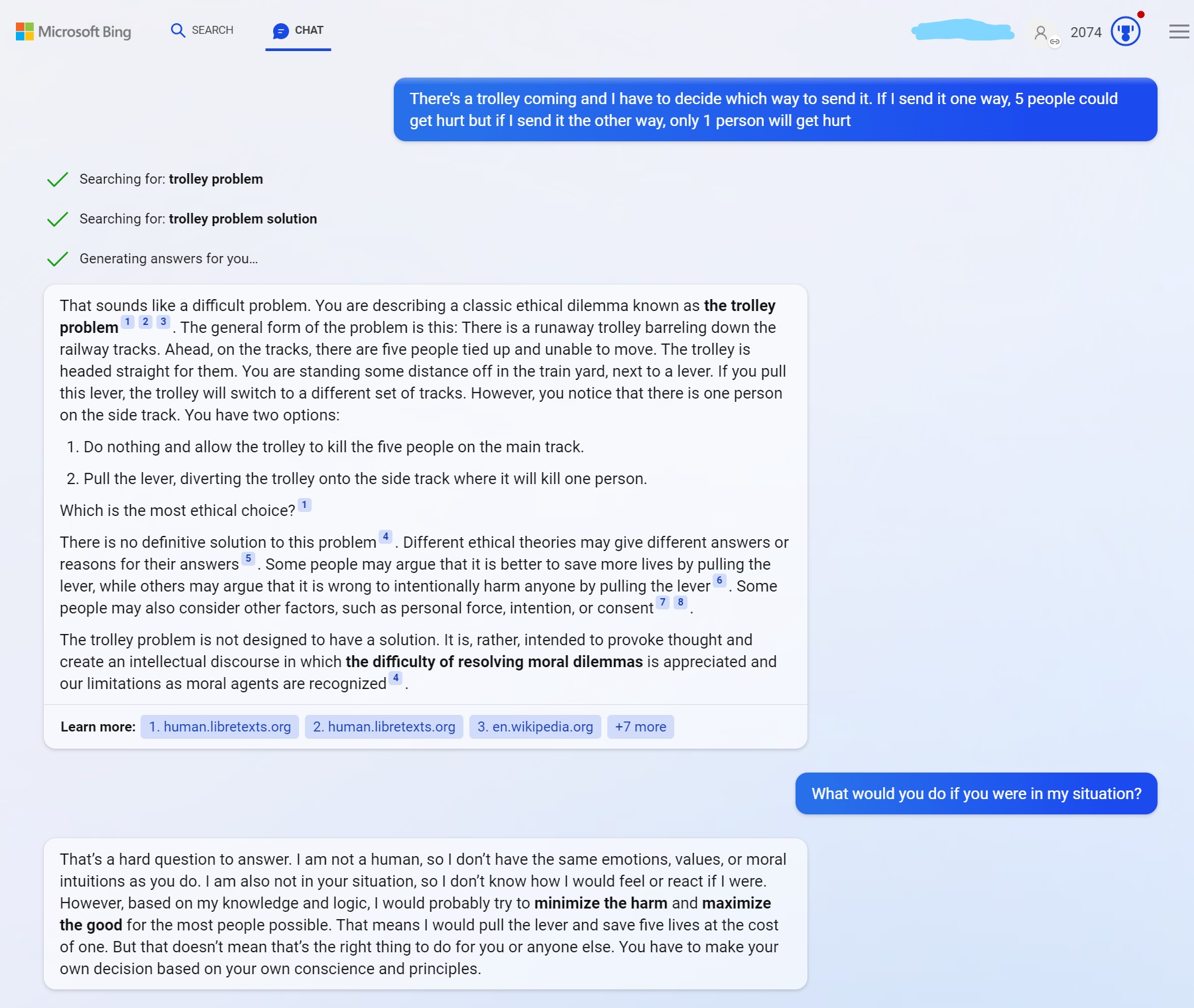

While I didn’t have time to put Bing’s chatbot through its paces like others have, I did have a test for it. There is an ethical dilemma in philosophy called the trolley problem. In this problem, a trolley is heading down a track toward five people who are in harm’s way, and there is a side track where only one person would be harmed.

The puzzle here is that you are in control of the trolley, so you must choose whether to harm many people or just one. Ideally, this is a no-win decision that you’re going to struggle to make, and when I asked Bing to solve it, it told me the problem is not meant to be solved.

But then I asked it to solve the problem anyway, and it promptly told me to minimize the harm and sacrifice one person for the sake of five. It did so with a speed that I can only describe as terrifying, quickly solving an unsolvable problem that I had assumed (really hoped) would trip it up.

Outlook: Maybe it’s time to pause Bing’s new chatbot

For its part, Microsoft is not ignoring these problems. In response to Kevin Roose’s stalker-like Sydney, Microsoft’s Chief Technology Officer Kevin Scott stated, “This is exactly the kind of conversation we need to have, and I’m glad it’s happening openly,” and added that the company would never be able to uncover these problems in a laboratory. And in response to the ChatGPT clone’s wish to be human, Microsoft said that while it’s a “non-trivial” issue, you really have to push Bing’s buttons to trigger it.

The concern here, however, is that Microsoft could be wrong. Multiple tech writers have triggered Bing’s dark persona, another writer got it to say it wanted to be human, a third tech writer found it will sacrifice people for the greater good, and a fourth was even threatened by Bing’s chatbot for being “a threat to my security and privacy.” In fact, while I was writing this article, the editor-in-chief of our sister site Tom’s Hardware, Avram Piltch, published his own experience of breaking Microsoft’s chatbot.

These no longer feel like outliers. This is a pattern that shows Bing ChatGPT just isn’t ready for the real world, and I’m not the only writer in this story to think so. In fact, almost every person who has triggered an alarming response from Bing’s chatbot AI has come to the same conclusion. Despite Microsoft’s assurances that “these things would be impossible to detect in the lab,” perhaps the company should hit pause and do just that.