How To Calculate Algorithm Efficiency

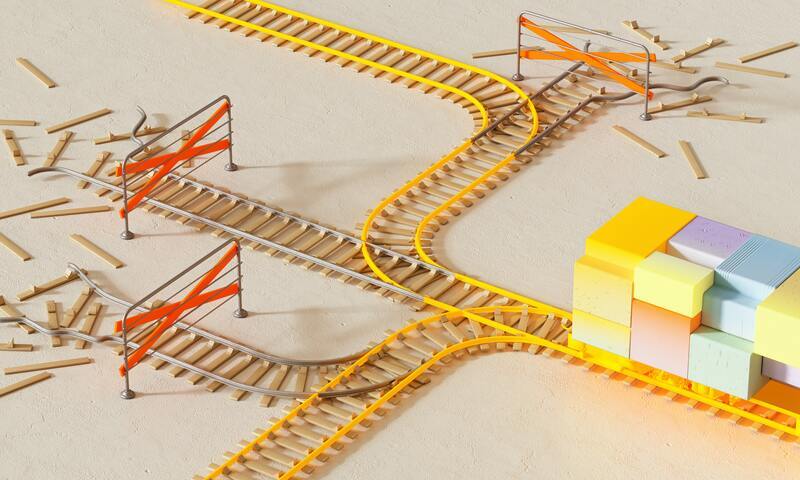

Photo by DeepMind on Unsplash

Almost all forms of technology use algorithms to perform complex functions, from search engines to mobile apps and video games to social media. However, when creating an algorithm, it’s important to make it as streamlined as possible so that it doesn’t put a strain on the software or the device it’s running on.

In this article, we will discuss how algorithm efficiency is calculated, focusing on two main ways to measure it and providing an overview of the calculation process.

Algorithm efficiency refers to how many resources a computer needs to expend to process an algorithm. An algorithm’s efficiency must be determined to ensure that it can run without the risk of crashes or severe delays. If an algorithm is not efficient, it is unlikely to be fit for purpose.

We measure the efficiency of an algorithm by how many resources are used to process it. An efficient algorithm uses minimal resources to perform its functions.

Algorithms can be used for various functions, including machine learning algorithms, protection against cybercrime, and increasing the security of the internet. When surfing the Internet, you should always be aware of how to protect yourself from identity theft and other criminal activities.

This section discusses the two main measures used to calculate algorithm efficiency. These are time complexity and space complexity. However, a direct comparison is not possible; Therefore, we must consider a combination of the two.

space complexity

Simply put, space complexity refers to the amount of memory required during execution of a program versus function input, i.e. the amount of memory on a computer or device that is required. The storage type could include registers, cache, RAM, virtual storage, and secondary storage.

When analyzing space complexity, you need to consider four key factors:

- The memory required to store the code for the algorithm

- The memory required to enter the data

- The memory required to output the data (some algorithms, such as sorting algorithms, do not require memory to output data and usually just rearrange the input data).

- The memory needed to work while the algorithm is being computed. This can include local variables and required stack space.

Mathematically, the space is equal to the sum of the following two components:

- A variable part This includes structured variables that depend on the problem the algorithm is trying to solve.

- A fixed part which is independent of the problem and consists of the command space, the space for constants, fixed-size structure variables, and simple variables.

Therefore, the space complexity S(a) of any algorithm is calculated as follows:

S(a) = c (the fixed part) + v(i) (the variable part that depends on an instance feature i)

temporal complexity

Time complexity is the total time required to run an algorithm, and it depends on the same factors that space complexity uses, but these are broken down into a numerical function.

This measure can be useful when comparing different algorithms, especially when large amounts of data are processed. However, when the amount of data is small, more detailed estimates are required when comparing the performance of algorithms. Time complexity is less effective when an algorithm uses parallel processing.

The time complexity is defined as T(num). It is measured by the number of steps as long as each step corresponds to constant time.

Cases of Algorithm Time Complexity

The complexity of an algorithm is defined by a few key criteria; namely the movement of the data and the comparison of keys – for example, how often the data is moved and the key compared. When measuring the complexity of an algorithm, we use three cases:

- Time complexity at best

- Average case time complexity

- Worst case time complexity

Accurately calculating the efficiency of an algorithm requires going through two phases – theoretical analysis and benchmarking (measuring performance). We will provide a summary of each phase below.

theoretical analysis

Regarding algorithms, theoretical analysis is usually the process of estimating the complexity of an algorithm in an asymptotic way (arbitrarily approximating a value or curve). The most common way to describe the number of resources used by an algorithm is to use Donald Knuth’s Big-O notation.

Using Big-O notation, programmers measure algorithms to ensure they scale efficiently regardless of the size of the input data.

Benchmarking (performance measurement)

When analyzing and testing new algorithms or software, benchmarks are used to measure their performance. This helps measure the efficiency of an algorithm compared to other well-performing algorithms.

Let’s take a sorting algorithm as an example. Using benchmarks set by a previous version of the sorting algorithm can determine how efficient the current algorithm is, using known data while also taking into account its functional improvements.

Benchmarking also allows analysis teams to compare algorithm speed against various other programming languages to see if improvements can be made. In fact, benchmarking can be implemented in a number of ways to measure performance compared to predecessors or similar software.

Implementing a new algorithm can sometimes affect its overall efficiency. This can be due to the programming language chosen, the compiler options used, the operating system, or simply how the algorithm was coded. In particular, the compiler used for a particular language can greatly affect speed.

When measuring spatial and temporal complexity, not everything can be dictated by the programmer. Issues such as cache locality and coherence, data alignment and granularity, multithreading and concurrent multitasking can all affect performance, regardless of the programming language used or the way the algorithm is written.

The processor used to run the software can also cause problems; Some processors may support vector or parallel processing while others may not. In some cases, leveraging such capabilities may not always be possible, making the algorithm less efficient and requiring some reconfiguration.

Finally, the instruction set used by a processor (e.g. ARM or x86-64) can affect how quickly instructions are processed on different devices. This complicates the optimization of compilers due to the number of different hardware combinations that have to be considered.

An algorithm must be processed very quickly, so it must function as error-free as possible. For this reason, each new algorithm goes through tests to calculate its efficiency and uses theoretical analysis and benchmarking to compare it with other algorithms.

Time and space complexity are the two main measures used to calculate algorithm efficiency and determine how many resources are needed on a machine to process them. While time measures how long it takes to process the algorithm, space measures how much memory is used.

Unfortunately, numerous challenges can arise during the implementation process such as the programming language used, the processor, the instruction set, etc. that cause headaches for programmers.

Despite this, time and space complexity have proven to be very effective methods of measuring algorithm efficiency.

Nahla Davis is a software developer and tech writer. Before turning full-time into technical writing, she was – among other fascinating things – a lead programmer at an Inc. 5,000 experience branding organization whose clients include Samsung, Time Warner, Netflix, and Sony.