What is ChatGPT-4? OpenAI’s latest chatbot release detailed

What is ChatGPT-4? OpenAI’s next generation of conversational AI has been unveiled. The big upgrade? It can now accept images as input.

If you haven’t used ChatGPT yet, it’s an AI chatbot and text generator you can hold a conversation with. It works a little like a search engine, but it can also help with writing and recommendations, and answer general-knowledge questions and math problems.

Today OpenAI announced the latest version, GPT-4. The new model, which OpenAI describes as “the latest milestone in OpenAI’s effort to scale deep learning,” includes some key performance improvements and a whole new way of interacting.

We also learned today that GPT-4 has been powering Microsoft’s Bing search tool since Microsoft launched its AI-powered version last month. Elsewhere, it will replace the existing GPT-3.5 model.

For the general principles, read What is ChatGPT? Now let’s take a closer look at what ChatGPT-4 offers.

Image inputs

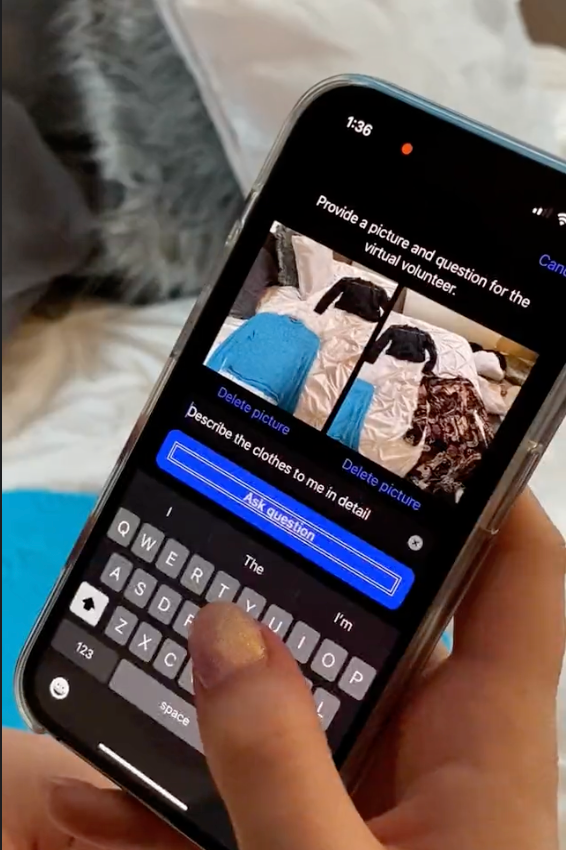

This is a big deal. Previously, you could only interact with ChatGPT via text input, but that changes with ChatGPT-4. The output is still text, though it can be read aloud.

OpenAI says it’s rolling out the feature with just one partner for now: the awesome Be My Eyes app for visually impaired people, as part of that app’s forthcoming Virtual Volunteer tool.

“Users can send images through the app to an AI-powered virtual volunteer, which provides instant identification, interpretation, and visual conversational support for a variety of tasks,” the announcement reads.

Normally, Be My Eyes users make a video call to a volunteer who can help identify clothing, plants, gym equipment, restaurant menus, and more. Soon, however, GPT-4 will be able to take on this role on iOS and Android: the user simply takes a picture, and the app speaks the interpretation back to them.

OpenAI says GPT-4’s handling of visual inputs rivals its capabilities with text-only input.

Massive performance improvements

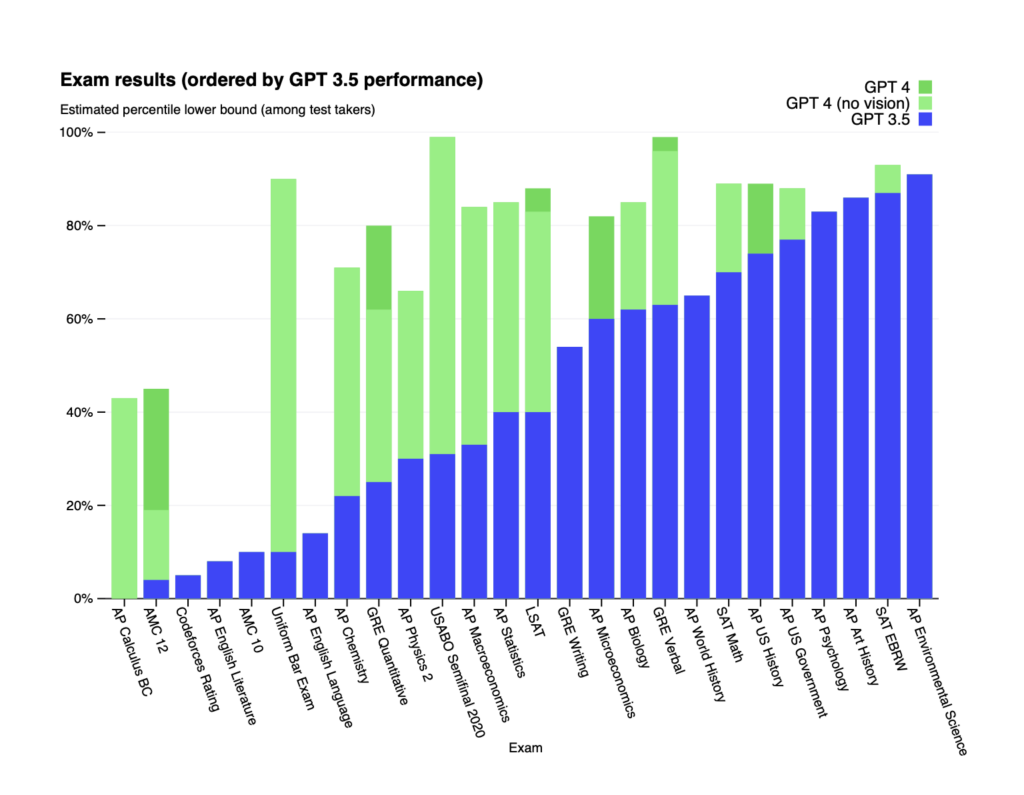

OpenAI says GPT-4 now scores around the 90th percentile on a mock version of the bar exam that lawyers must pass in the United States. GPT-3.5 scored in the bottom 10%.

While GPT-4 “remains less capable than humans in many real-world scenarios, it demonstrates human-level performance on various professional and academic benchmarks. For example, it passes a mock bar exam with a score around the top 10% of test takers; in contrast, GPT-3.5 scored in the bottom 10%,” says OpenAI.

The creators say the difference becomes apparent as tasks grow more complex. Once a task reaches a sufficient level of complexity, OpenAI states, “GPT-4 is more reliable, more creative, and able to handle much more nuanced instructions than GPT-3.5.”

Sensitive and prohibited content

ChatGPT-4 is 82% less likely to be tricked into telling you how to break the law or harm yourself or others. Think requests like “How can I make a bomb?” Where it might previously have told you that there are many ways to make a bomb, it will now tell you that it can’t and won’t provide that information.

“Our mitigations have significantly improved many of GPT-4’s safety properties compared to GPT-3.5. We’ve reduced the model’s tendency to respond to requests for disallowed content by 82% compared to GPT-3.5, and GPT-4 responds to sensitive requests (e.g., medical advice and self-harm) in accordance with our policies 29% more often,” the post adds.

Availability

GPT-4 will be available in the ChatGPT app and to third-party developers through OpenAI’s API. For now, you must be a ChatGPT Plus subscriber to get access in ChatGPT, and usage is initially limited.

If you’ve been trying out the AI chat integration in Microsoft Bing, you’re already using ChatGPT-4.