How Photographers Will Benefit from Intel and NVIDIA’s Latest Tech

Intel recently announced new Xeon W-3400 and W-2400 processors for professional workstations. At the same time, NVIDIA announced its latest RTX 6000 Ada-generation GPUs. It's the first time in years that Intel and NVIDIA, longtime partners, have announced new flagship products simultaneously.

The new platforms, and the workstations built around them, are extremely exciting for a wide range of prosumer and professional users, including data scientists, artists, photographers, and videographers. The platforms are on the bleeding edge of technology, introducing significant performance gains and more expandability than ever.

PetaPixel recently chatted with Roger Chandler, GP and GM of Creator and Workstation Solutions in the Client Computing Group at Intel, and Bob Pette, the VP of Professional Visualization at NVIDIA, to discuss the latest Xeon and NVIDIA products, their key features, and how they fit into professional workflows.

What are the primary benefits of the Intel Xeon W-2400 and W-3400 family of processors, the W790 chipset, and NVIDIA’s latest Ada-generation GPUs for professional photographers and videographers?

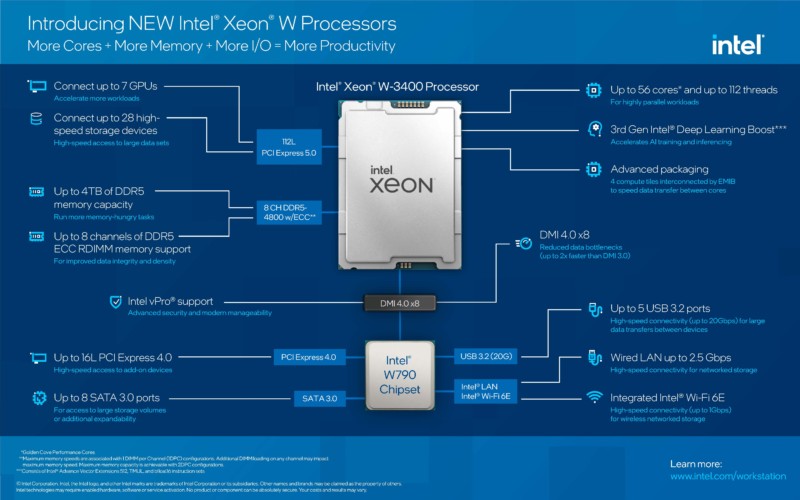

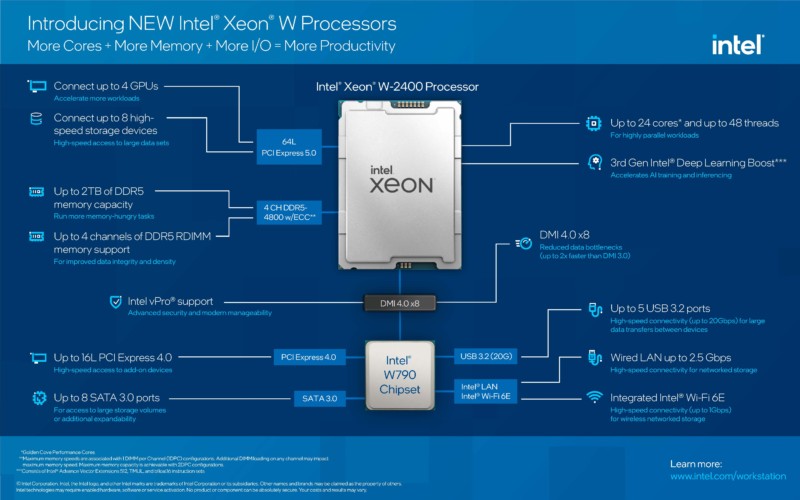

Roger Chandler: We're representing a full platform, which is great. Users buy platforms, run workloads, and have complicated workflows. From the Intel perspective, we recently announced our new Xeon W-3400 and W-2400 product lines. These products are a step-function improvement across the board and across so many features and capabilities.

It’s been a few years since we’ve updated this kind of professional workstation class of products. I’ll walk through some of the key features and how that’s relevant to specific use cases, and then I’ll hand over to Bob and he can walk through the same thing on the NVIDIA side.

In the platform itself, there’s the CPU, and the CPU works in tandem with the GPU and the rest of the platform to drive the workflow and be the heart of the system, so to speak. With our new platforms, we’ve doubled the number of cores you can put on a single socket. This is extremely relevant for rendering workloads and anything that’s highly multi-threaded. We’ve increased the frequency and the individual performance of each of those cores by about 30%. This is really important because a lot of photo editing workloads are heavily dependent upon individual core performance. Not only have we doubled the overall number of cores, we’ve increased the overall performance in each individual core based on our Sapphire Rapids architecture. It’s a breakthrough architecture.

Note: Sapphire Rapids is the codename for Intel’s fourth-generation Xeon workstation processors.

We've also increased the memory and the overall cache size. We've upgraded to DDR5 memory, and you can put up to four terabytes of memory into one of these systems. This is really important for folks who are dealing with extremely complex images and 3D designers who are creating 3D environments that are massive datasets. We're seeing a lot of traction in the market for workstations for data scientists because they need big, beefy workstations to do training and inferencing on the same system. They're dealing with tremendous datasets, so increasing the memory footprint is important. We're seeing up to a 75% improvement in a lot of these traditional data science workloads because of these changes.

We’ve also got 3rd Gen Deep Learning Boost, which is meant to speed up inferencing frameworks. There are a number of other overall architecture improvements. Also, what we’ve done is increase the number of PCIe Gen 5 lanes, so that increases the overall bandwidth and throughput of data. There are up to 112 lanes of PCIe 5.0 in these systems. What this opens up is the opportunity for users to expand the system with high-speed storage or GPUs. This is a market where customers like to put a lot of GPUs into their workstations.

I’ll hand it over to Bob here. What we’re trying to do here is expand options for customizable systems where folks can build extremely powerful platforms.

Bob Pette: We too like to look at the whole system, so we got really excited about Sapphire Rapids a while ago. Unlocking the amount of bandwidth required for a multi-GPU system requires something like the Intel Xeon W. You need to have enough lanes and cores to feed these things.

The reason why large-GPU and multi-GPU systems are so important is that artificial intelligence (AI) is changing the way people are working. AI is in a lot of the codes in photo and video editing apps and rendering apps. Being able to feed more GPUs helps with the rendering, as Roger said, and helps with our metaverse push and our Omniverse product. Most importantly, it helps our customers get more done.

It’s a very balanced system. It’s the first time that mainstream providers will have four-GPU workstations, something you used to have to go to the data center for. People can essentially have a supercomputer sitting at their desk.

Our RTX 6000 Ada has twice the performance of its predecessor, with updated Tensor cores for AI and updated shader and compute cores. It basically delivers twice the graphics rendering and AI performance.

The beauty of Sapphire Rapids and Ada together, besides the fact that there are a lot of things that run on the GPU and don't run on the CPU and vice versa, is that together they will essentially accelerate every code that people use. You have codes that maybe don't take advantage of the GPU that are going to run faster because of the things Roger just talked about. Things that do take advantage of the CPU and GPU will run faster because the GPU can be fed. The fact you can put more GPUs in the system means that the data scientist, artist, or video editor can get more done. We've packed all this performance into essentially the same power envelope.

For your area in particular, the Ada GPUs also have additional encode and decode onboard, enabling multiple 8K video streams to be fed off of disk. The power video editor will have a field day with apps like DaVinci Resolve, Premiere Pro, and the like.

How many of the performance gains you’ve described are realized independently of any changes to software code, and how much work must software developers do to ensure that their applications are fully realizing the possible gains?

Chandler: Intel and NVIDIA have partnered with some of these leading software developers, like Adobe, Blackmagic, Corel, you name it, for decades. Whenever you introduce a new architecture with new features, there is work that you try to do as elegantly as possible so that the code can just naturally take advantage of things. But when you're doing disruptive things, like doubling the core count, some workloads scale naturally and others must be changed to take advantage of this.

We have people who partner with software developers at the business level and engineers who partner with them at the engineering level. As the development process is going, we work with their engineers to help them find where there could be bottlenecks, or where we could get more performance based on a new instruction set or the new dynamic in the platform itself.

Unless you’re just increasing the overall frequency, or sometimes just increasing the memory size, you have to do the work. When you’re changing the architecture, you have to do the work. We do extensive work with our partners on this.

Some applications get a natural benefit on Sapphire Rapids, but generally speaking, it takes work. It doesn't always show up at launch. It's kind of cool to see that the performance is great at launch, but then after six months you can see demonstrable performance improvements because the applications continue to get optimized. That's exciting, and we like to keep a steady drumbeat of this so that folks know that the workstation they bought is actually getting better over time. It's a cool value proposition.

Pette: Similar to Roger, we’ve got an extensive group that works with our partners. The whole value proposition is that “it just works,” so we work with partners in advance of shipping new GPUs, as does Intel.

In the case of GPU programming, it's been fairly stable. If you're using DirectX or Vulkan, you automatically get the benefit. There's a class of what I'll call CPU applications, although they're not really CPU applications, that saw an immediate benefit with Sapphire Rapids because the extra cores provided more throughput. They may be able to take advantage of other features and get further improvements, but we were seeing 50% to 200% improvement without changing the code. I was pleasantly surprised by the immediate benefit that the extra core count gave to apps that theoretically were throttled.

With the additional work that we do, the goal is a balanced system. It’s a problem if the GPU can’t keep up with the CPU or vice versa, or the networking doesn’t keep up with both, or the drives are slow, so we tend to look most at a balanced system. With situations like video editing, you’re pulling terabytes off a disk, or network-attached storage, so we put the system through the paces and try to identify any gap that needs to be closed off. Maybe we need to upgrade a networking driver or talk to partners about using flash storage because the performance gains in their apps aren’t being realized.

The cool thing is that we didn’t see anything that didn’t experience an immediate benefit. As we move forward, those things that benefit will grow.

Chandler: In this particular segment, there’s really a deep focus on the user, the experience, and the workflow. There are individual apps and workflows, but when you look at complicated workloads, users are using four, five, or six different applications that all have to work together at once.

Intel and NVIDIA have partnered for years. There are so many fantastic platforms that have Intel CPUs and NVIDIA GPUs out there. Often, as we go through this, we’re looking at the full workflow from a platform perspective. If Bob’s team finds issues, they make sure we’re aware, and vice versa. We have a good engineering relationship. It’s all about delivering a full platform for complex workflows.

The other part of this segment is that users are extremely mindful of stability. Users make a big investment and use the machines for years, so we make sure that all checks out too.

Pette: I want to add onto the multi-app comment. That's a very good point because nobody runs one app. All apps need computing power and threads from their respective processors. Imagine running Premiere Pro, Photoshop, and DaVinci Resolve at the same time. That, without any code changes, is going to run faster because it won't be bottlenecked by where to run the data through. It's really a huge benefit for our customers.

Chandler: One other thing on these complex workflows. They’re complex, but also these particular systems are generally used by professionals in professional settings, so there’s this kind of IT build going on in the background. We check out all that stuff too, and consider the typical environment for the user. You unpeel that onion and it takes a lot of work. It’s a big focus of our engineers to make sure that when this workstation is out there, we’ve checked it all out.

You emphasized that these workstations are machines that people will be using for an extended period. Can you speak to the expandability of the system? If people are using the workstation four or five years down the road, how responsive is the entire platform to people’s changing needs?

Pette: From my side, and this is probably a question better suited to the original equipment manufacturers (OEMs), we talk about future-proofing. There's some performance that people may not get to in these collective systems until a year or two down the road. And it's going to come fast. AI keeps getting into more and more codes.

One way to deal with these changes is with software updates and driver updates. We can tweak and tune the GPU and CPU alike to get more and more out of the system. As system builders start building more Gen 5 devices, people can get better performance. People can grow with more performant storage and network-attached stuff. Users can add more GPUs and add more memory.

We typically see that people are on a three-to-five-year refresh cycle in the workstation business. That’s more from a financial model than from a “need.” Say you’re a prosumer. Three years from now, it’s still going to be an awesome system. If you got one with a single CPU and GPU today, the codes will change over time to improve. If you need additional performance, you can stick another CPU or GPU into it.

I'm so excited because this is the first time in about four years that the rollout of our flagship GPU product has aligned with the rollout of Intel's flagship CPU. It's been a while. We've stagger-stepped, and now, boom, customers have the best CPU on the market and the best GPU on the market in the same system, at the same time. I think it's really going to do wonders for the productivity of our customers.

Chandler: Feature-wise, we specifically chose the absolute bleeding-edge capabilities, like super-fast, highly expandable DDR5 memory and PCIe 5.0. This is the state of the art.

When you look at the systems that the OEMs are building, they’re definitely future-proofing a lot of these systems. And it’s not just about expanding the individual system, it’s also about the future of the workloads. You’re starting to see these hybrid workloads where you’ll have multiple people in one location that want to access an individual system. You want to distribute that compute somehow. Being able to rack-mount can account for different workflows. We want to support that.

There are going to be systems shipping with a level of compute power that, three years ago, I never would have believed could be available in a single system. It's crazy cool. I think it's going to really set the stage for the next four or five years.

This platform seems especially appealing for users who will be editing multiple streams of 8K RAW footage. That’s so heavily demanding that even last year’s best computers could struggle at times. These machines sound well-equipped to deal with high-res video editing.

Pette: That's one of the strong use cases in general. Imagine a workstation with dual Xeon Ws, four Ada 6000s, four TB of DDR5 memory, and flash drives. It is a supercomputer at your desk.

You’re going to need that. People want 8K and 12K. There’s AI processing in that, so you need that compute power. That’s something else these machines future-proof for as more of these tools add AI and as video streams get larger and denser.

Chandler: We've all come out of this world-changing societal shift from COVID, where everything was digital. One of the effects of that is the increased pressure on creators. How do we create Hollywood-class content in a distributed way, how do we use in-camera visual effects, how do we do these things remotely? You've seen this increased focus on digital creation. It accelerated well beyond where we thought it was going to get to, which increased the need for computing.

When you look at how things have evolved on the content creation side, now everyone’s a creator. It’s easy to say that this thing is future-proof for five or six years, but seeing how fast everything has grown, it’s really needed now. I’m an optimist but I’m still surprised by how positive the collective response has been to these new platforms. It’s pretty exciting.

Pette: Yeah, and the OEMs are excited as well. They are all salivating. They have all designed these gorgeous systems and they're all looking to take share. It's the first time in a while that NVIDIA and Intel have come together at the same point, and at that same point, OEMs, with assurances from us, can design whole new systems as well. I can't wait to see what some of our customers will do with it because they will blow us away. We know what our platform is capable of, but they're going to exceed that.

So many of my peers, myself included, use the latest M1- and M2-powered Macs. But when you're talking about expandability and versatility, and being able to create a workstation tailored to your specific needs, it seems like the Intel platforms offer much more freedom and many more options.

Chandler: Exactly. You'll see workstation designs built for more compute power, so maybe they have a couple of Xeons and a single GPU. But you'll also see designs that pair a lower core-count Xeon with a load of GPUs, because maybe the CPU-intensive aspects of the workload aren't as critical to those users. They have the choice.

We have a whole portfolio because this is a complex ecosystem, a complex market, and workloads are different. Our users also know what they need, so now they have options, which I think is great.

Pette: Yeah, there’s not a prescriptive one-to-one. And there’s not just two options either. You pick the right size and number of CPUs, and pick the right size and number of GPUs. We collectively help our customers figure that out.

In the case of others, it’s this or this, and one size does not fit all.

Chandler: Both NVIDIA and Intel deeply respect the do-it-yourself (DIY) market. They're the folks who like to take these on their own and do things with them. What we did with the Xeon W, for the first time, is offer unlocked SKUs. Overclockers can take this and do some crazy things. We've already given some overclockers some samples.

You can buy something off-the-shelf from an OEM or build your own too. The scalability is going to ignite a lot of innovation.

Since you supply components to OEMs, the companies building and selling off-the-shelf workstations, do you believe that the competition this breeds between makers results in a better experience for the end user?

Chandler: I think so. We’re committed to big investments in open-source, and big investments into DIY.

When you provide a lot of options, you have to provide a lot of insight into what option is best for what, otherwise folks can get lost in the choices. It’s important to understand user needs.

Anytime innovation occurs, anywhere in the ecosystem, it inspires everyone else. And that’s a good thing, from my perspective.

Pette: Absolutely. We treat everybody fairly and want everyone to succeed, whether it’s the large OEMs or the tier twos, or the DIY’ers.

We purposely test these things and do private reviews on our own to make sure they all can be successful. In some cases, we might need to suggest driver changes or suggest some hardware configurations or things like that.

As I said, the OEMs are really excited about this. They got extremely creative. They got aggressive, thankfully, in what they’re going to enable with these new processors. Any competition in general encourages people to think out of the box, be a bit more creative, and squeeze out that last ounce of performance. It absolutely helps.

Primary Takeaways for Photographers

We already had a good idea about how powerful Intel’s new Xeon W-3400 and W-2400 CPUs are and we knew about the massive performance gains promised by NVIDIA’s latest RTX 6000 Ada-generation GPU, too. However, it was informative to hear directly from Intel and NVIDIA about how excited the two companies are about their new products and learn more about the evolving nature of new platform architecture.

Even though the performance gains at launch are significant for a wide range of users, including heavy photo and video editors, it's interesting to consider how additional changes to code by software developers, along with new driver and firmware updates, will likely extract even more performance out of the new hardware platform.

Where do Intel and NVIDIA stand in the age-old Mac vs. PC debate? Both companies, of course, have had long relationships with Apple, although those relationships have rapidly changed with the advent of Apple silicon and the M1- and M2-series chips. These chips remove Intel and NVIDIA from the equation altogether with Apple-built CPUs and GPUs. While Chandler and Pette weren't interested in attacking Apple directly, they were keen to highlight the benefits of a more open system.

Since Intel and NVIDIA work with and sell components to companies that then design and build workstation computers, there are many options for customers on the market. Intel and NVIDIA also support DIY customers, meaning individual users can build machines to meet their needs precisely. While a company like Apple considers the needs of its customers, too, its relatively closed system limits the options presented to an individual customer.

There are benefits to Apple’s approach, of course, including extreme control over product quality and stability. On the other hand, it was interesting to learn more about how hands-on Intel and NVIDIA are with their partners, big and small. Intel and NVIDIA engineers take a hands-on approach, checking in with OEMs to ensure they have what they need and that everything they’re designing works as expected. There’s a heavy emphasis on stability, as workstation users cannot afford to experience issues and downtime.

Ultimately, flexibility is what Intel and NVIDIA-powered workstations offer customers more than anything, even more than supercomputer-like performance at a desk. Computer makers are enabled to innovate and succeed, and customers have many more choices. Not only do prosumers and professionals have more options right when they purchase a new workstation computer, but they also have many ways to upgrade their machines over time to adapt to changing needs.

Image credits: Intel, NVIDIA